Reduce ML inference costs on Amazon SageMaker for PyTorch models using Amazon Elastic Inference | AWS Machine Learning Blog

Sun Tzu's Awesome Tips On CPU or GPU for Inference - World-class cloud from India | High performance cloud infrastructure | E2E Cloud | Alternative to AWS, Azure, and GCP

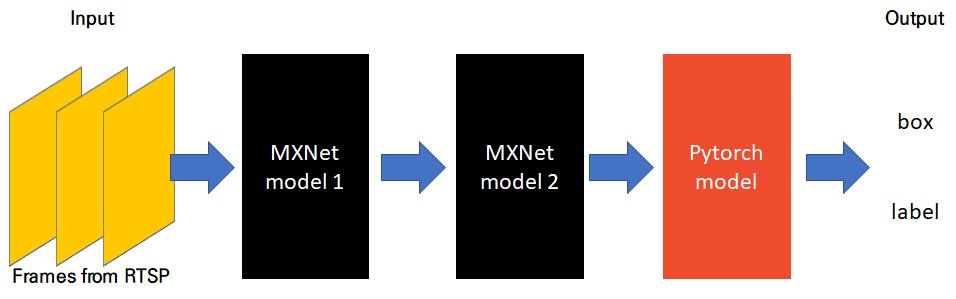

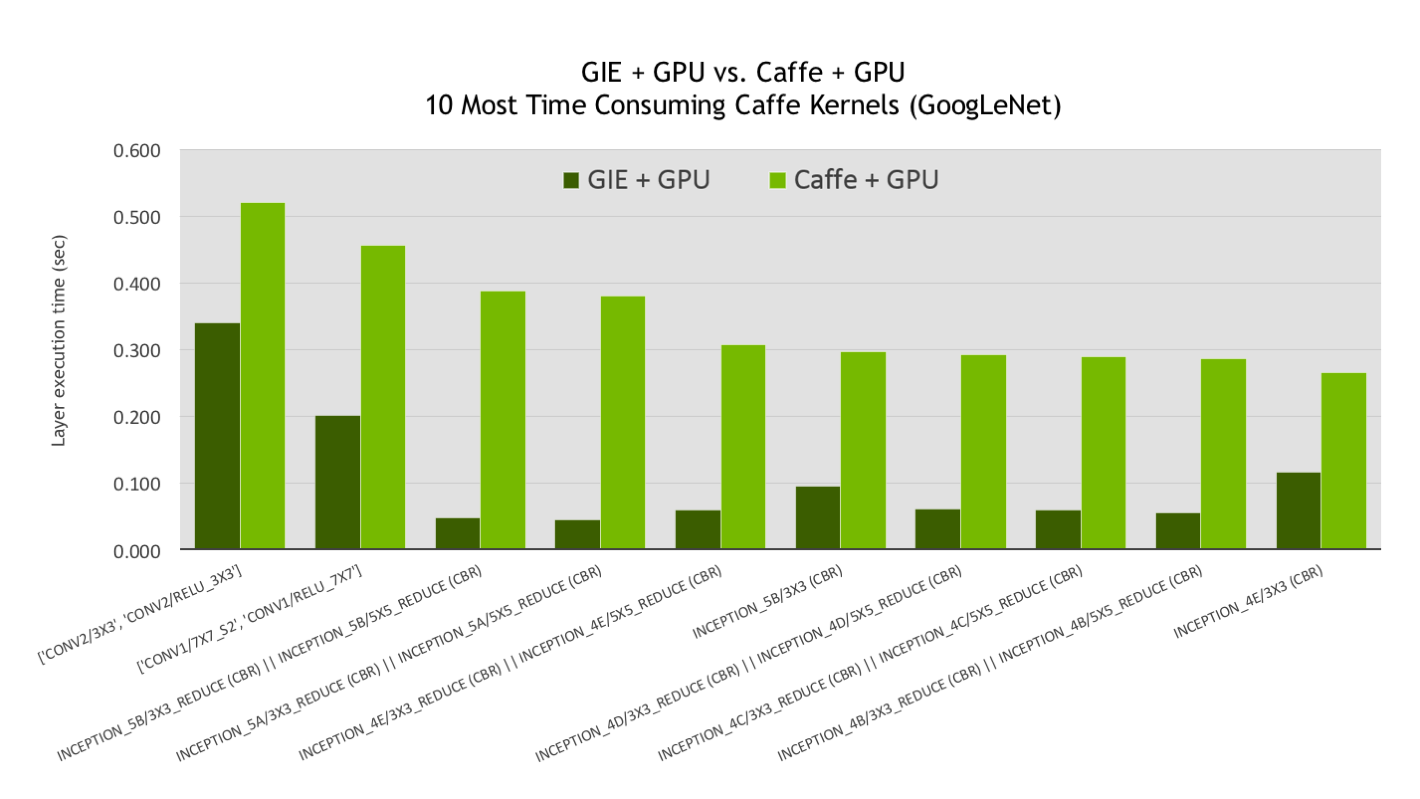

GPU-Accelerated Inference for Kubernetes with the NVIDIA TensorRT Inference Server and Kubeflow | by Ankit Bahuguna | kubeflow | Medium

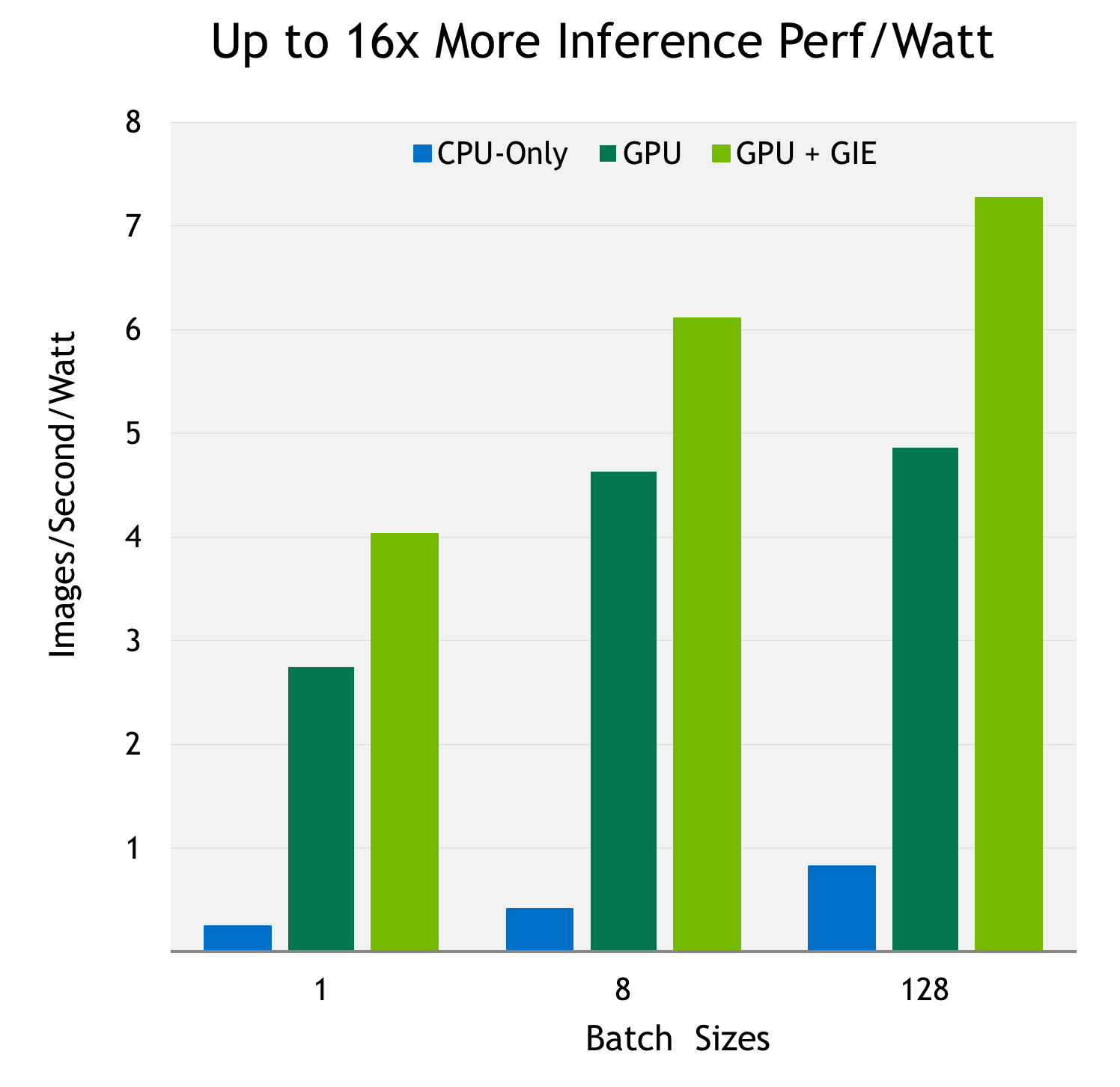

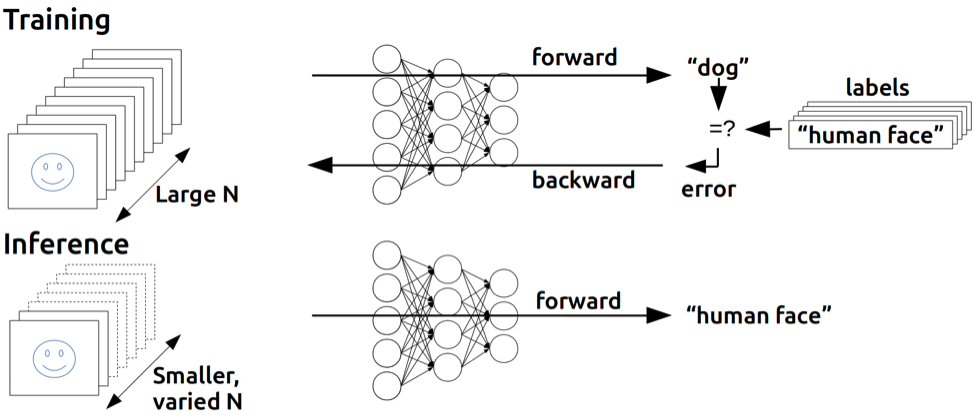

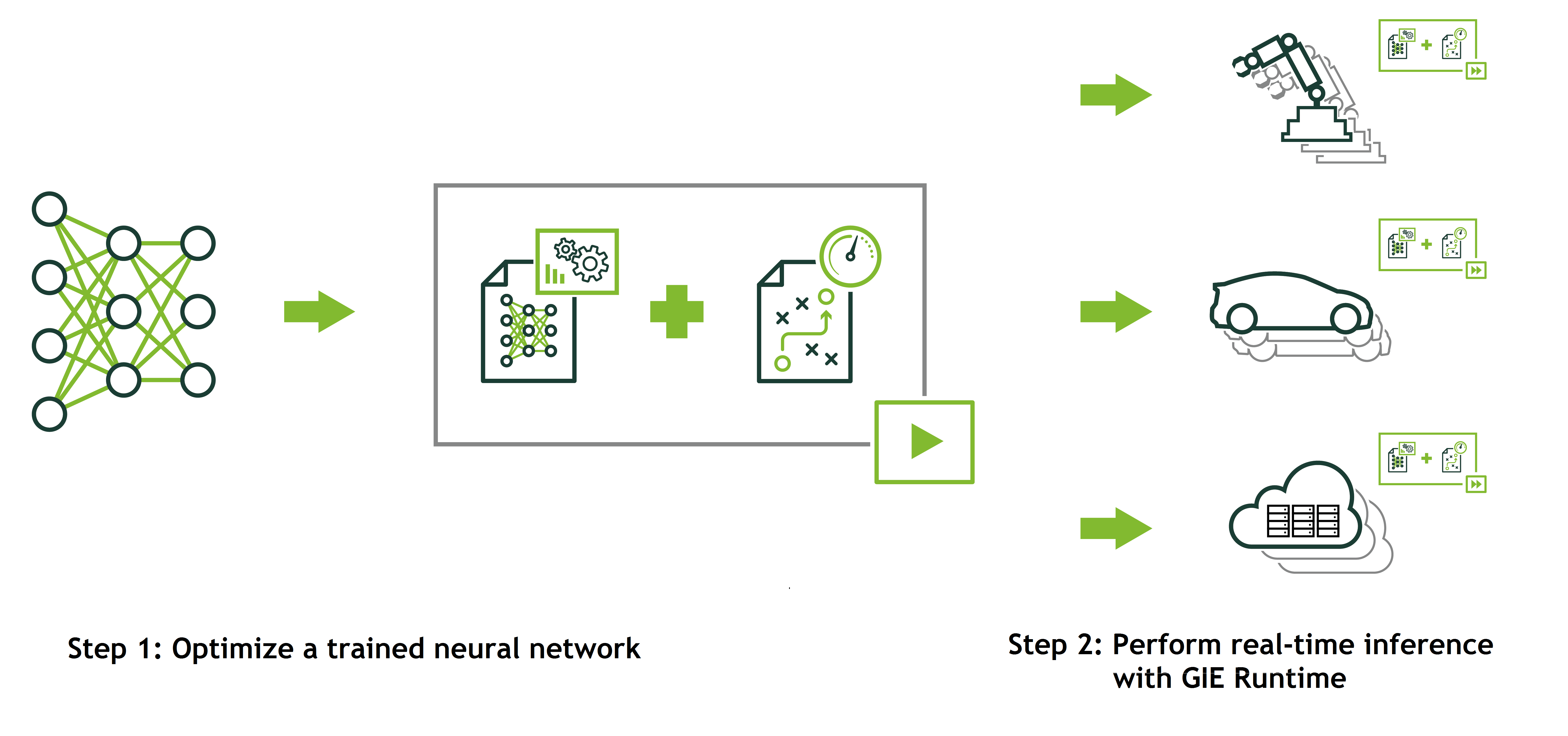

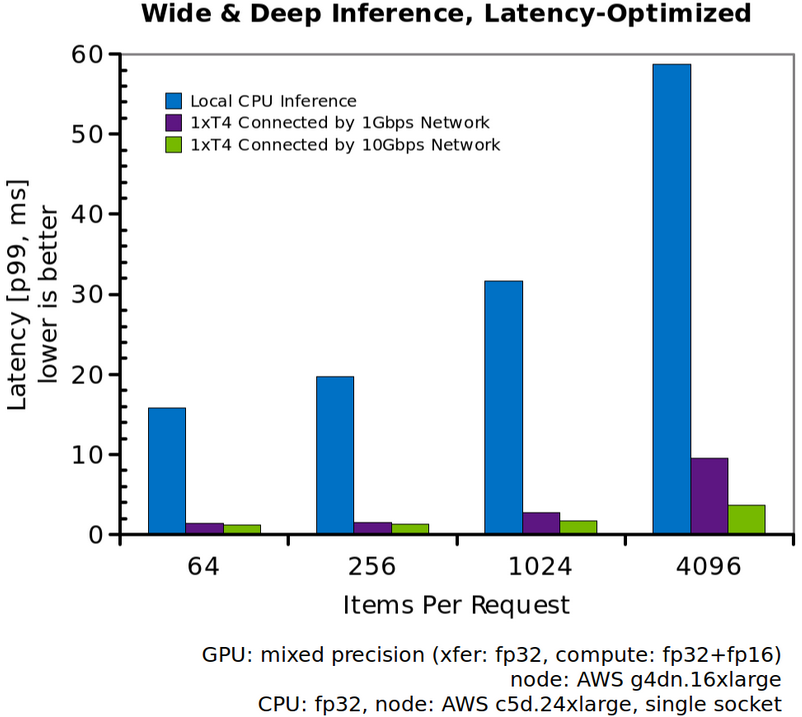

A complete guide to AI accelerators for deep learning inference — GPUs, AWS Inferentia and Amazon Elastic Inference | by Shashank Prasanna | Towards Data Science

FPGA-based neural network software gives GPUs competition for raw inference speed | Vision Systems Design

Bikal Tech on Twitter: "Performance #GPU vs #CPU for #AI optimisation #HPC #Inference and #DL #Training https://t.co/Aqf0UD5n7m" / Twitter

NVIDIA AI on Twitter: "Learn how #NVIDIA Triton Inference Server simplifies the deployment of #AI models at scale in production on CPUs or GPUs in our webinar on September 29 at 10am

Inference latency of Inception-v3 for (a) CPU and (b) GPU systems. The... | Download Scientific Diagram

The performance of training and inference relative to the training time... | Download Scientific Diagram

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research