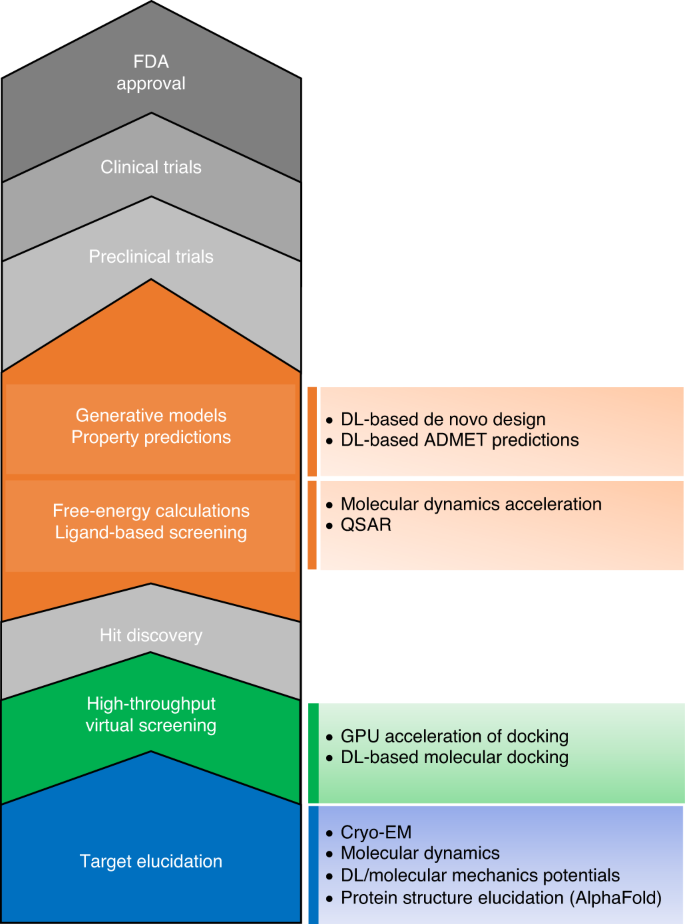

The transformational role of GPU computing and deep learning in drug discovery | Nature Machine Intelligence

Register For Data Science Meetup: NVIDIA RAPIDS GPU-Accelerated Data Analytics & Machine Learning Workshop, 2nd Edition

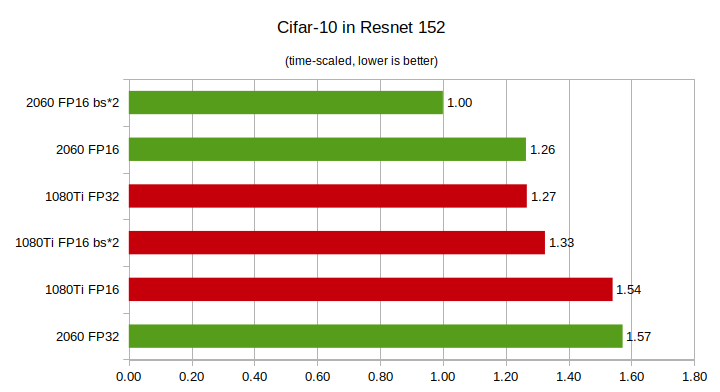

RTX 2060 Vs GTX 1080Ti Deep Learning Benchmarks: Cheapest RTX card Vs Most Expensive GTX card | by Eric Perbos-Brinck | Towards Data Science

Performance comparison of different GPUs and TPU for CNN, RNN and their...

Paris, France - Feb 20, 2019: Man holding latest Nvidia Quadro RTX 5000 workstation professional video card GPU for professional CAD CGI scientific machine learning front view Stock Photo - Alamy

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science